Assignment 1: https://cs231n.github.io/assignments2024/assignment1/

Assignment 1

This assignment is due on Friday, April 19 2024 at 11:59pm PST. Starter code containing Colab notebooks can be downloaded here. Setup Please familiarize yourself with the recommended workflow by watching the Colab walkthrough tutorial below: Note. Ensure y

cs231n.github.io

train data와 test data(X) 간의 유클리드 거리를 계산하여 비교

def compute_distances_two_loops(self, X):

num_test = X.shape[0]

num_train = self.X_train.shape[0]

dists = np.zeros((num_test, num_train))

for i in range(num_test):

for j in range(num_train):

# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

dists[i, j] = np.sqrt(np.sum(np.power(self.X_train[j] - X[i], 2)))

# *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

return distsdef compute_distances_one_loop(self, X):

num_test = X.shape[0]

num_train = self.X_train.shape[0]

dists = np.zeros((num_test, num_train))

for i in range(num_test):

# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

dists[i] = np.sqrt(np.sum(np.power(self.X_train - X[i], 2), axis=1))

# *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

return distsdef compute_distances_no_loops(self, X):

num_test = X.shape[0]

num_train = self.X_train.shape[0]

dists = np.zeros((num_test, num_train))

# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

dists = np.sqrt(

-2 * (X @ self.X_train.T) +

np.power(X, 2).sum(axis=1, keepdims=True) +

np.power(self.X_train, 2).sum(axis=1, keepdims=True).T

)

# *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

return dists당연하게도 loop가 많을수록 계산시간이 늘어난다.(해당 실험은 코드상 구현되어있으나 생략)

train label에서 k개의 가장 가까운 sample을 찾은 후 가장 많이 나타난 class로 예측

def predict_labels(self, dists, k=1):

num_test = dists.shape[0]

y_pred = np.zeros(num_test)

for i in range(num_test):

closest_y = []

# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

closest_y = self.y_train[dists[i].argsort()[:k]]

# *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

y_pred[i] = np.argmax(np.bincount(closest_y))

# *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

return y_pred

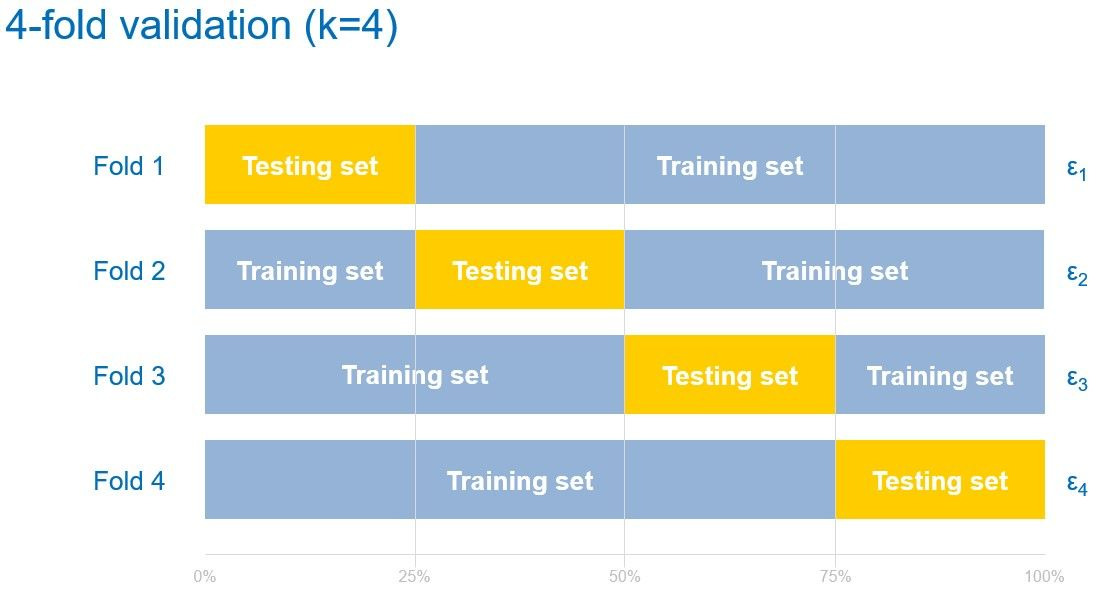

여기서 cross-validation을 통해 최적의 k를 찾는 실험도 있으나 이 또한 생략하였다.

참고 : https://github.com/mantasu/cs231n/blob/master/assignment1/knn.ipynb

'Computer Vision > CS231N' 카테고리의 다른 글

| [CS231N] Assignment 3 Q1. Image Captioning with Vanilla RNNs (0) | 2025.04.03 |

|---|